Thursday, September 11, 2025

Monday, January 25, 2021

Kill sidecar container

apiVersion: batch/v1
kind: Job
metadata:
  name: lifecycle-check1
spec:
  ttlSecondsAfterFinished: 1
  template:
    spec:
      containers:
      - name: app1
        image: artifactory.<URL>:5000/location/alpine:latest
        command: ["sh", "-c"]
        args:
        - |
          echo Start.
          sleep 10
          touch /message/processDone
          exit 0
        volumeMounts:
        - mountPath: /message
          name: test-volume
      - name: app2
        image: artifactory.<URL>:5000/location/alpine:latest
        command: ["sh", "-c"]
        lifecycle:
          type: Sidecar
        args:
        - |
          trap 'echo "Trapped SIGTERM"; sleep 3; echo "See you!!"; exit 0' TERM
          echo Start.
          while true
          do
            date
            # sleep 20
            if [ -f /message/processDone ]; then
              echo "File found, exiting"
              exit 0
            else
              echo "file not found yet"
            fi
          done
        volumeMounts:
        - mountPath: /message
          name: test-volume
      volumes:
      - name: test-volume
        emptyDir: {}
      restartPolicy: Never
      # terminationGracePeriodSeconds: 30
  backoffLimit: 4
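The Job above relies on a file-based handshake: app1 creates /message/processDone when its work is done, and app2 polls for that file and exits once it appears. A minimal local sketch of the same idea, assuming a temporary directory in place of the emptyDir volume and a one-second pause between polls (the original loop has its sleep commented out); `run_handshake` is a hypothetical name:

```shell
# Local simulation of the two containers sharing a volume.
run_handshake() {
  msgdir=$(mktemp -d)                 # stands in for the shared /message volume

  # "app1": do the work (shortened to 1 second here), then drop the marker file.
  ( sleep 1; touch "$msgdir/processDone" ) &
  app_pid=$!

  # "app2": poll for the marker, pausing between checks instead of spinning.
  while [ ! -f "$msgdir/processDone" ]; do
    echo "file not found yet"
    sleep 1
  done
  echo "File found, exiting"

  wait "$app_pid"
  rm -rf "$msgdir"
}

run_handshake
```

The last line printed is always "File found, exiting"; how many "file not found yet" lines precede it depends on timing.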

Saturday, July 13, 2019

Thursday, September 13, 2018

Hive query to list all the tables with partitions

Query 1 (tables with partitions in a single database, selected by DB_ID):

Run in the Hive metastore's backend Oracle database

select distinct TBLS.TBL_NAME, DBS.NAME--,PARTITIONS.PART_NAME,SDS.LOCATION,TBLS.DB_ID

from TBLS,PARTITIONS,DBS

where TBLS.DB_ID=249847

and TBLS.TBL_ID=PARTITIONS.TBL_ID

and DBS.DB_ID = TBLS.DB_ID

order by 1;

Query 2 (tables with partitions across all databases):

select distinct TBLS.TBL_NAME, DBS.NAME--,PARTITIONS.PART_NAME,SDS.LOCATION,TBLS.DB_ID

from TBLS,PARTITIONS,DBS

where TBLS.TBL_ID=PARTITIONS.TBL_ID

and DBS.DB_ID = TBLS.DB_ID

order by 2,1;

Sunday, September 17, 2017

Namenode and Resource Manager High-Availability

3 Nodes:

Node  Hostname               IP Address  Roles
1     namenode1.cluster.com  10.0.0.51   Namenode (Active), Resource Manager (Active), Zookeeper1, ZookeeperFailoverController, journalnode, historyserver
2     namenode2.cluster.com  10.0.0.52   Namenode (Standby), Resource Manager (Standby), Zookeeper2, ZookeeperFailoverController, journalnode, historyserver
3     datanode1.cluster.com  10.0.0.53   Datanode, NodeManager, Zookeeper3, journalnode


NAMENODE1 - Login as Root:

[root@namenode1 ~]# ll

total 346884

-rw-r--r-- 1 root root 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

-rw-r--r-- 1 root root 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

-rw-r--r-- 1 root root 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[root@namenode1 ~]#

[root@namenode1 ~]#

[root@namenode1 ~]# cat /etc/hosts

10.0.0.51 namenode1.cluster.com namenode1

10.0.0.52 namenode2.cluster.com namenode2

10.0.0.53 datanode1.cluster.com datanode1
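Each node's address should appear on exactly one line of this file; two hostnames mapped to the same IP will send HA traffic to the wrong machine. A small sketch of a check for that, where `find_duplicate_ips` is a hypothetical helper and not part of any Hadoop tooling:

```shell
# Print every IP address that appears on more than one non-comment line
# of a hosts-style file (first field = address).
find_duplicate_ips() {
  awk 'NF > 0 && $1 !~ /^#/ { count[$1]++ }
       END { for (ip in count) if (count[ip] > 1) print ip }' "$1" | sort
}

# Example: find_duplicate_ips /etc/hosts
```

No output means every address is unique.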

[root@namenode1 ~]# groupadd hadoop

[root@namenode1 ~]# useradd -g hadoop hadoop

[root@namenode1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: it is WAY too short

BAD PASSWORD: is a palindrome

Retype new password:

passwd: all authentication tokens updated successfully.

[root@namenode1 ~]# mv * /home/hadoop/

[root@namenode1 ~]#

[root@namenode1 ~]#

[root@namenode1 ~]# ll /home/hadoop/

total 346884

-rw-r--r-- 1 root root 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

-rw-r--r-- 1 root root 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

-rw-r--r-- 1 root root 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[root@namenode1 ~]#

[root@namenode1 ~]# chown -R hadoop:hadoop /home/hadoop/*

[root@namenode1 ~]#

[root@namenode1 ~]# ll /home/hadoop/

total 346884

-rw-r--r-- 1 hadoop hadoop 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

-rw-r--r-- 1 hadoop hadoop 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

-rw-r--r-- 1 hadoop hadoop 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[root@namenode1 ~]#

[root@namenode1 ~]# su - hadoop

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ll

total 346884

-rw-r--r-- 1 hadoop hadoop 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

-rw-r--r-- 1 hadoop hadoop 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

-rw-r--r-- 1 hadoop hadoop 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[hadoop@namenode1 ~]$

Extract all the files:

[hadoop@namenode1 ~]$ tar zxvf hadoop-2.6.0.tar.gz

[hadoop@namenode1 ~]$ tar zxvf jdk-7u75-linux-x64.tar.gz

[hadoop@namenode1 ~]$ tar zxvf zookeeper-3.4.6.tar.gz

[hadoop@namenode1 ~]$ ll

total 346896

drwxr-xr-x 9 hadoop hadoop 4096 Nov 14 2014 hadoop-2.6.0

-rw-r--r-- 1 hadoop hadoop 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

drwxr-xr-x 8 hadoop hadoop 4096 Dec 19 2014 jdk1.7.0_75

-rw-r--r-- 1 hadoop hadoop 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

drwxr-xr-x 10 hadoop hadoop 4096 Feb 20 2014 zookeeper-3.4.6

-rw-r--r-- 1 hadoop hadoop 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[hadoop@namenode1 ~]$

Create sym links

[hadoop@namenode1 ~]$ ln -s zookeeper-3.4.6 zookeeper

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ln -s hadoop-2.6.0 hadoop

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ln -s jdk1.7.0_75 jdk

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ll

total 346896

lrwxrwxrwx 1 hadoop hadoop 12 Aug 9 15:59 hadoop -> hadoop-2.6.0

drwxr-xr-x 9 hadoop hadoop 4096 Nov 14 2014 hadoop-2.6.0

-rw-r--r-- 1 hadoop hadoop 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

lrwxrwxrwx 1 hadoop hadoop 11 Aug 9 15:59 jdk -> jdk1.7.0_75

drwxr-xr-x 8 hadoop hadoop 4096 Dec 19 2014 jdk1.7.0_75

-rw-r--r-- 1 hadoop hadoop 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

lrwxrwxrwx 1 hadoop hadoop 15 Aug 9 15:59 zookeeper -> zookeeper-3.4.6

drwxr-xr-x 10 hadoop hadoop 4096 Feb 20 2014 zookeeper-3.4.6

-rw-r--r-- 1 hadoop hadoop 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[hadoop@namenode1 ~]$

Edit .bash_profile

[hadoop@namenode1 ~]$ vim .bash_profile

HADOOP_HOME=/home/hadoop/hadoop

JAVA_HOME=/home/hadoop/jdk

ZOOKEEPER_HOME=/home/hadoop/zookeeper

PATH=$PATH:$HOME/bin:$HADOOP_HOME:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$JAVA_HOME:$JAVA_HOME/bin:$ZOOKEEPER_HOME:$ZOOKEEPER_HOME/bin

export PATH

~

[hadoop@namenode1 ~]$ source .bash_profile

Generate an ssh key pair and enable passwordless ssh from nn1 to nn2 and from nn2 to nn1 (needed for ssh fencing)

[hadoop@namenode1 ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

ee:5c:9f:d6:a6:ad:f5:6d:f6:8f:db:41:aa:26:3f:f6 hadoop@namenode1.cluster.com

The key's randomart image is:

+--[ RSA 2048]----+

| |

| |

| |

| |

| S . |

| . o |

| . . o.. |

| o o =o+o+=|

| o =+BE++O|

+-----------------+

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ssh-copy-id -i .ssh/id_rsa.pub 10.0.0.51

The authenticity of host '10.0.0.51 (10.0.0.51)' can't be established.

RSA key fingerprint is b1:b3:d8:e3:e2:36:75:ae:6a:8b:0a:ce:85:7b:ce:2f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.51' (RSA) to the list of known hosts.

hadoop@10.0.0.51's password:

Now try logging into the machine, with "ssh '10.0.0.51'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ssh 10.0.0.51

[hadoop@namenode1 ~]$ logout

Connection to 10.0.0.51 closed.

[hadoop@namenode1 ~]$

Change the permissions of files in .ssh folder

[hadoop@namenode1 ~]$ ll -a

total 346924

drwx------ 6 hadoop hadoop 4096 Aug 9 16:03 .

drwxr-xr-x. 3 root root 4096 Aug 9 15:57 ..

-rw-r--r-- 1 hadoop hadoop 18 Dec 2 2011 .bash_logout

-rw-r--r-- 1 hadoop hadoop 384 Aug 9 16:02 .bash_profile

-rw-r--r-- 1 hadoop hadoop 124 Dec 2 2011 .bashrc

lrwxrwxrwx 1 hadoop hadoop 12 Aug 9 15:59 hadoop -> hadoop-2.6.0

drwxr-xr-x 9 hadoop hadoop 4096 Nov 14 2014 hadoop-2.6.0

-rw-r--r-- 1 hadoop hadoop 195257604 May 12 21:04 hadoop-2.6.0.tar.gz

lrwxrwxrwx 1 hadoop hadoop 11 Aug 9 15:59 jdk -> jdk1.7.0_75

drwxr-xr-x 8 hadoop hadoop 4096 Dec 19 2014 jdk1.7.0_75

-rw-r--r-- 1 hadoop hadoop 142245547 May 12 21:04 jdk-7u75-linux-x64.tar.gz

drwx------ 2 hadoop hadoop 4096 Aug 9 16:03 .ssh

-rw------- 1 hadoop hadoop 952 Aug 9 16:03 .viminfo

lrwxrwxrwx 1 hadoop hadoop 15 Aug 9 15:59 zookeeper -> zookeeper-3.4.6

drwxr-xr-x 10 hadoop hadoop 4096 Feb 20 2014 zookeeper-3.4.6

-rw-r--r-- 1 hadoop hadoop 17699306 Nov 6 2014 zookeeper-3.4.6.tar.gz

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ll -a .ssh/

total 24

drwx------ 2 hadoop hadoop 4096 Aug 9 16:03 .

drwx------ 6 hadoop hadoop 4096 Aug 9 16:03 ..

-rw------- 1 hadoop hadoop 404 Aug 9 16:03 authorized_keys

-rw------- 1 hadoop hadoop 1675 Aug 9 16:03 id_rsa

-rw-r--r-- 1 hadoop hadoop 404 Aug 9 16:03 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 394 Aug 9 16:03 known_hosts

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ chmod 600 .ssh/*

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ll -a .ssh/

total 24

drwx------ 2 hadoop hadoop 4096 Aug 9 16:03 .

drwx------ 6 hadoop hadoop 4096 Aug 9 16:03 ..

-rw------- 1 hadoop hadoop 404 Aug 9 16:03 authorized_keys

-rw------- 1 hadoop hadoop 1675 Aug 9 16:03 id_rsa

-rw------- 1 hadoop hadoop 404 Aug 9 16:03 id_rsa.pub

-rw------- 1 hadoop hadoop 394 Aug 9 16:03 known_hosts

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

CONFIGURATIONS

[hadoop@namenode1 ~]$ vim hadoop/etc/hadoop/hadoop-env.sh

JAVA_HOME=/home/hadoop/jdk

[hadoop@namenode1 ~]$ vim hadoop/etc/hadoop/core-site.xml
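The contents of core-site.xml are not shown in this post. A minimal sketch of what an HA setup like this one typically needs, assuming the nameservice ID `ha-cluster` (it appears in the bootstrapStandby output further down) and ZooKeeper's default client port 2181. `write_core_site` is a hypothetical helper, and the values are inferred, not the author's actual file:

```shell
# Write a minimal HA core-site.xml to the path given as $1.
write_core_site() {
  cat > "$1" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Clients address the logical nameservice, not a single NameNode host -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ha-cluster</value>
  </property>
  <!-- ZooKeeper ensemble used for automatic failover (port 2181 assumed) -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181</value>
  </property>
</configuration>
EOF
}

# Example: write_core_site hadoop/etc/hadoop/core-site.xml
```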

[hadoop@namenode1 ~]$ vim hadoop/etc/hadoop/hdfs-site.xml
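The hdfs-site.xml contents are also missing here. The sketch below generates the usual QJM-based HA properties, assuming the nameservice `ha-cluster`, the NameNode IDs `namenode1` and `namenode2`, and ports 8020 and 50070 (all visible in the bootstrapStandby output later in this post), the default JournalNode port 8485, and the hadoop user's key for ssh fencing. `prop` and `write_hdfs_site` are hypothetical helpers; treat the values as a starting point, not the author's exact configuration:

```shell
# Emit one Hadoop <property> element: prop NAME VALUE
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Write a minimal HA hdfs-site.xml to the path given as $1.
write_hdfs_site() {
  ns=ha-cluster   # nameservice ID
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    prop dfs.nameservices "$ns"
    prop "dfs.ha.namenodes.$ns" "namenode1,namenode2"
    prop "dfs.namenode.rpc-address.$ns.namenode1" "namenode1.cluster.com:8020"
    prop "dfs.namenode.rpc-address.$ns.namenode2" "namenode2.cluster.com:8020"
    prop "dfs.namenode.http-address.$ns.namenode1" "namenode1.cluster.com:50070"
    prop "dfs.namenode.http-address.$ns.namenode2" "namenode2.cluster.com:50070"
    # All three JournalNodes form the shared edits quorum
    prop dfs.namenode.shared.edits.dir "qjournal://10.0.0.51:8485;10.0.0.52:8485;10.0.0.53:8485/$ns"
    prop "dfs.client.failover.proxy.provider.$ns" "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
    prop dfs.ha.automatic-failover.enabled true
    # sshfence needs the passwordless ssh set up earlier
    prop dfs.ha.fencing.methods sshfence
    prop dfs.ha.fencing.ssh.private-key-files /home/hadoop/.ssh/id_rsa
    echo '</configuration>'
  } > "$1"
}

# Example: write_hdfs_site hadoop/etc/hadoop/hdfs-site.xml
```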

[hadoop@namenode1 ~]$ vim hadoop/etc/hadoop/slaves

10.0.0.53

[hadoop@namenode1 ~]$ cp -v zookeeper/conf/zoo_sample.cfg zookeeper/conf/zoo.cfg

`zookeeper/conf/zoo_sample.cfg' -> `zookeeper/conf/zoo.cfg'

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ vim zookeeper/conf/zoo.cfg

dataDir=/home/hadoop/data/zookeeper

server.1=10.0.0.51:2888:3888

server.2=10.0.0.52:2888:3888

server.3=10.0.0.53:2888:3888

[hadoop@namenode1 ~]$ mkdir -p data/zookeeper

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ touch data/zookeeper/myid

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ll data/zookeeper/myid

-rw-rw-r-- 1 hadoop hadoop 0 Aug 9 16:20 data/zookeeper/myid

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ vim data/zookeeper/myid

[hadoop@namenode1 ~]$ cat data/zookeeper/myid

1

[hadoop@namenode1 ~]$
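The number in myid has to match this node's server.N line in zoo.cfg. Instead of typing it by hand on each node, it can be derived from the config. A sketch, with `myid_for` as a hypothetical helper name:

```shell
# Print the N of the server.N entry in zoo.cfg ($1) whose host part equals $2.
myid_for() {
  awk -F= -v host="$2" '/^server\.[0-9]+=/ {
    split($2, addr, ":")            # addr[1] is the host in host:peerPort:electionPort
    if (addr[1] == host) {
      sub(/^server\./, "", $1)      # keep only the numeric id
      print $1
    }
  }' "$1"
}

# Example: myid_for zookeeper/conf/zoo.cfg 10.0.0.51 > data/zookeeper/myid
```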

YARN – Resource Manager High Availability CONFIGURATIONS

Edit mapred-site.xml on 10.0.0.51 (namenode1)

[hadoop@namenode1 ~]$ cp -v hadoop/etc/hadoop/mapred-site.xml.template hadoop/etc/hadoop/mapred-site.xml

`hadoop/etc/hadoop/mapred-site.xml.template' -> `hadoop/etc/hadoop/mapred-site.xml'

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ vim hadoop/etc/hadoop/mapred-site.xml
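The edited mapred-site.xml is not shown. At a minimum it has to tell MapReduce to run on YARN. The sketch below also points the job history address at namenode1 on the default port 10020, which is an assumption based on the roles table above; `prop` and `write_mapred_site` are hypothetical helpers:

```shell
# Emit one Hadoop <property> element: prop NAME VALUE
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Write a minimal mapred-site.xml to the path given as $1.
write_mapred_site() {
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    prop mapreduce.framework.name yarn
    prop mapreduce.jobhistory.address namenode1.cluster.com:10020   # assumed host and port
    echo '</configuration>'
  } > "$1"
}

# Example: write_mapred_site hadoop/etc/hadoop/mapred-site.xml
```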

RM CONFIGURATIONS
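The yarn-site.xml settings are not reproduced in this post. A sketch of the ResourceManager HA properties for Hadoop 2.6, assuming the RM IDs `rm1` and `rm2` (the ones queried with `yarn rmadmin` at the end of this post), one RM on each namenode host, and ZooKeeper on port 2181. The cluster ID `yarn-ha-cluster` is an invented placeholder, and `prop` and `write_yarn_site` are hypothetical helpers:

```shell
# Emit one Hadoop <property> element: prop NAME VALUE
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Write a minimal RM HA yarn-site.xml to the path given as $1.
write_yarn_site() {
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    prop yarn.resourcemanager.ha.enabled true
    prop yarn.resourcemanager.cluster-id yarn-ha-cluster      # placeholder name
    prop yarn.resourcemanager.ha.rm-ids "rm1,rm2"
    prop yarn.resourcemanager.hostname.rm1 namenode1.cluster.com
    prop yarn.resourcemanager.hostname.rm2 namenode2.cluster.com
    # Same ZooKeeper ensemble that the NameNode failover controllers use
    prop yarn.resourcemanager.zk-address "10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181"
    # Shuffle service required by MapReduce on YARN
    prop yarn.nodemanager.aux-services mapreduce_shuffle
    echo '</configuration>'
  } > "$1"
}

# Example: write_yarn_site hadoop/etc/hadoop/yarn-site.xml
```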

Run commands on 10.0.0.51 (namenode1)

[hadoop@namenode1 ~]$ for i in {1..3}; do ssh 10.0.0.5$i "hostname;jdk/bin/jps;echo -e '\n'";done

namenode1.cluster.com

1433 Jps

namenode2.cluster.com

1399 Jps

datanode1.cluster.com

1386 Jps

[hadoop@namenode1 ~]$

On Namenode2:

[hadoop@namenode2 ~]$ vim data/zookeeper/myid

[hadoop@namenode2 ~]$ cat data/zookeeper/myid

2

[hadoop@namenode2 ~]$

On Datanode1:

[hadoop@datanode1 ~]$ vim data/zookeeper/myid

[hadoop@datanode1 ~]$ cat data/zookeeper/myid

3

[hadoop@datanode1 ~]$

Start the daemons:

[hadoop@namenode1 ~]$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-journalnode-namenode1.cluster.com.out

[hadoop@namenode1 ~]$ ssh namenode2

Last login: Sun Aug 9 16:40:23 2015 from 10.0.0.51

[hadoop@namenode2 ~]$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-journalnode-namenode2.cluster.com.out

[hadoop@namenode2 ~]$ ssh datanode1

Last login: Sun Aug 9 16:40:56 2015 from 10.0.0.52

[hadoop@datanode1 ~]$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-journalnode-datanode1.cluster.com.out

[hadoop@datanode1 ~]$ logout

Connection to datanode1 closed.

[hadoop@namenode2 ~]$ logout

Connection to namenode2 closed.

[hadoop@namenode1 ~]$ for i in {1..3}; do ssh 10.0.0.5$i "hostname;jdk/bin/jps;echo -e '\n'";done

namenode1.cluster.com

1484 JournalNode

1543 Jps

namenode2.cluster.com

1544 Jps

1486 JournalNode

datanode1.cluster.com

1472 JournalNode

1529 Jps

[hadoop@namenode1 ~]$

On Namenode1:

[hadoop@namenode1 ~]$ hdfs namenode -format

[hadoop@namenode1 ~]$ hadoop-daemon.sh start namenode

starting namenode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-namenode-namenode1.cluster.com.out

[hadoop@namenode1 ~]$ jps

1714 Jps

1484 JournalNode

[hadoop@namenode1 ~]$

On Namenode2:

[hadoop@namenode1 ~]$ ssh namenode2

Last login: Sun Aug 9 16:41:53 2015 from 10.0.0.51

[hadoop@namenode2 ~]$

[hadoop@namenode2 ~]$ hdfs namenode -bootstrapStandby

=====================================================

About to bootstrap Standby ID namenode2 from:

Nameservice ID: ha-cluster

Other Namenode ID: namenode1

Other NN's HTTP address: http://10.0.0.51:50070

Other NN's IPC address: namenode1.cluster.com/10.0.0.51:8020

Namespace ID: 682278749

Block pool ID: BP-1244531934-10.0.0.51-1439118899139

Cluster ID: CID-96aa1e31-2ab9-4af5-87b2-0e20d1910ca7

Layout version: -60

=====================================================

[hadoop@namenode2 ~]$ hadoop-daemon.sh start namenode

starting namenode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-namenode-namenode2.cluster.com.out

[hadoop@namenode2 ~]$ jps

1703 Jps

1656 NameNode

1486 JournalNode

[hadoop@namenode2 ~]$

[hadoop@namenode1 ~]$ for i in {1..3}; do ssh 10.0.0.5$i "hostname;jdk/bin/jps;echo -e '\n'";done

namenode1.cluster.com

1644 NameNode

1781 Jps

1484 JournalNode

namenode2.cluster.com

1656 NameNode

1486 JournalNode

1755 Jps

datanode1.cluster.com

1472 JournalNode

1574 Jps

[hadoop@namenode1 ~]$

Start the zookeeper servers:

[hadoop@namenode1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ ssh namenode2

Last login: Sun Aug 9 16:47:09 2015 from 10.0.0.51

[hadoop@namenode2 ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@namenode2 ~]$

[hadoop@namenode2 ~]$ ssh datanode1

Last login: Sun Aug 9 16:48:22 2015 from 10.0.0.52

[hadoop@datanode1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@datanode1 ~]$

[hadoop@datanode1 ~]$ logout

Connection to datanode1 closed.

[hadoop@namenode2 ~]$ logout

Connection to namenode2 closed.

[hadoop@namenode1 ~]$

[hadoop@namenode1 ~]$ for i in {1..3}; do ssh 10.0.0.5$i "hostname;jdk/bin/jps;echo -e '\n'";done

namenode1.cluster.com

1802 QuorumPeerMain

1644 NameNode

1851 Jps

1484 JournalNode

namenode2.cluster.com

1656 NameNode

1802 QuorumPeerMain

1845 Jps

1486 JournalNode

datanode1.cluster.com

1613 QuorumPeerMain

1472 JournalNode

1649 Jps

[hadoop@namenode1 ~]$

Start datanode:

[hadoop@namenode1 ~]$ ssh datanode1

The authenticity of host 'datanode1 (10.0.0.53)' can't be established.

RSA key fingerprint is b1:b3:d8:e3:e2:36:75:ae:6a:8b:0a:ce:85:7b:ce:2f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'datanode1' (RSA) to the list of known hosts.

Last login: Sun Aug 9 16:51:34 2015 from 10.0.0.52

[hadoop@datanode1 ~]$

[hadoop@datanode1 ~]$

[hadoop@datanode1 ~]$ hadoop-daemon.sh start datanode

starting datanode, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-datanode-datanode1.cluster.com.out

[hadoop@datanode1 ~]$

[hadoop@datanode1 ~]$

[hadoop@datanode1 ~]$ jps

1613 QuorumPeerMain

1732 Jps

1472 JournalNode

1690 DataNode

[hadoop@datanode1 ~]$

Format Zookeeper from NAMENODE1:

[hadoop@namenode1 ~]$ hdfs zkfc -formatZK

15/08/09 17:01:34 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/ha-cluster in ZK.

Start zookeeper failover controller on both the namenodes:

[hadoop@namenode1 ~]$ hadoop-daemon.sh start zkfc

starting zkfc, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-zkfc-namenode1.cluster.com.out

[hadoop@namenode1 ~]$ ssh namenode2

Last login: Sun Aug 9 17:01:03 2015 from 10.0.0.51

[hadoop@namenode2 ~]$ hadoop-daemon.sh start zkfc

starting zkfc, logging to /home/hadoop/hadoop-2.6.0/logs/hadoop-hadoop-zkfc-namenode2.cluster.com.out

[hadoop@namenode2 ~]$ logout

Connection to namenode2 closed.

[hadoop@namenode1 ~]$ for i in {1..3}; do ssh 10.0.0.5$i "hostname;jdk/bin/jps;echo -e '\n'";done

namenode1.cluster.com

2287 DFSZKFailoverController

2370 Jps

1802 QuorumPeerMain

1644 NameNode

1484 JournalNode

namenode2.cluster.com

2186 DFSZKFailoverController

1656 NameNode

1802 QuorumPeerMain

1486 JournalNode

2243 Jps

datanode1.cluster.com

1958 Jps

1613 QuorumPeerMain

1472 JournalNode

1690 DataNode

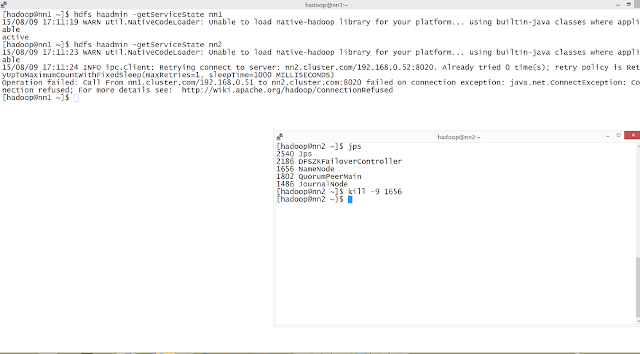

TESTING NN HA

Start Resource manager daemons

On NAMENODE1:

[hadoop@namenode1 ~]$ yarn-daemon.sh start resourcemanager

On NAMENODE2:

[hadoop@namenode2 ~]$ yarn-daemon.sh start resourcemanager

On DATANODE1:

[hadoop@datanode1 ~]$ yarn-daemon.sh start nodemanager

[hadoop@namenode1 ~]$ yarn rmadmin -getServiceState rm1

[hadoop@namenode1 ~]$ yarn rmadmin -getServiceState rm2
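Each of these commands prints either `active` or `standby`. In a healthy HA pair exactly one ResourceManager is active. A small sketch of that check, with `exactly_one_active` as a hypothetical helper that takes the reported states as arguments:

```shell
# Succeed only when exactly one of the given states is "active".
exactly_one_active() {
  active=0
  for state in "$@"; do
    if [ "$state" = "active" ]; then
      active=$((active + 1))
    fi
  done
  [ "$active" -eq 1 ]
}

# Example on the cluster:
#   exactly_one_active "$(yarn rmadmin -getServiceState rm1)" \
#                      "$(yarn rmadmin -getServiceState rm2)" && echo "RM HA looks healthy"
```

The same function works for the NameNodes if it is fed the output of `hdfs haadmin -getServiceState` for each NameNode ID.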

Labels: hadoop, high availability, namenode, namenode HA, yarn, YARN HA
